MEISD: A Multimodal Multi-Label Emotion, Intensity and Sentiment Dialogue Dataset for Emotion Recognition and Sentiment Analysis in Conversations

Abstract

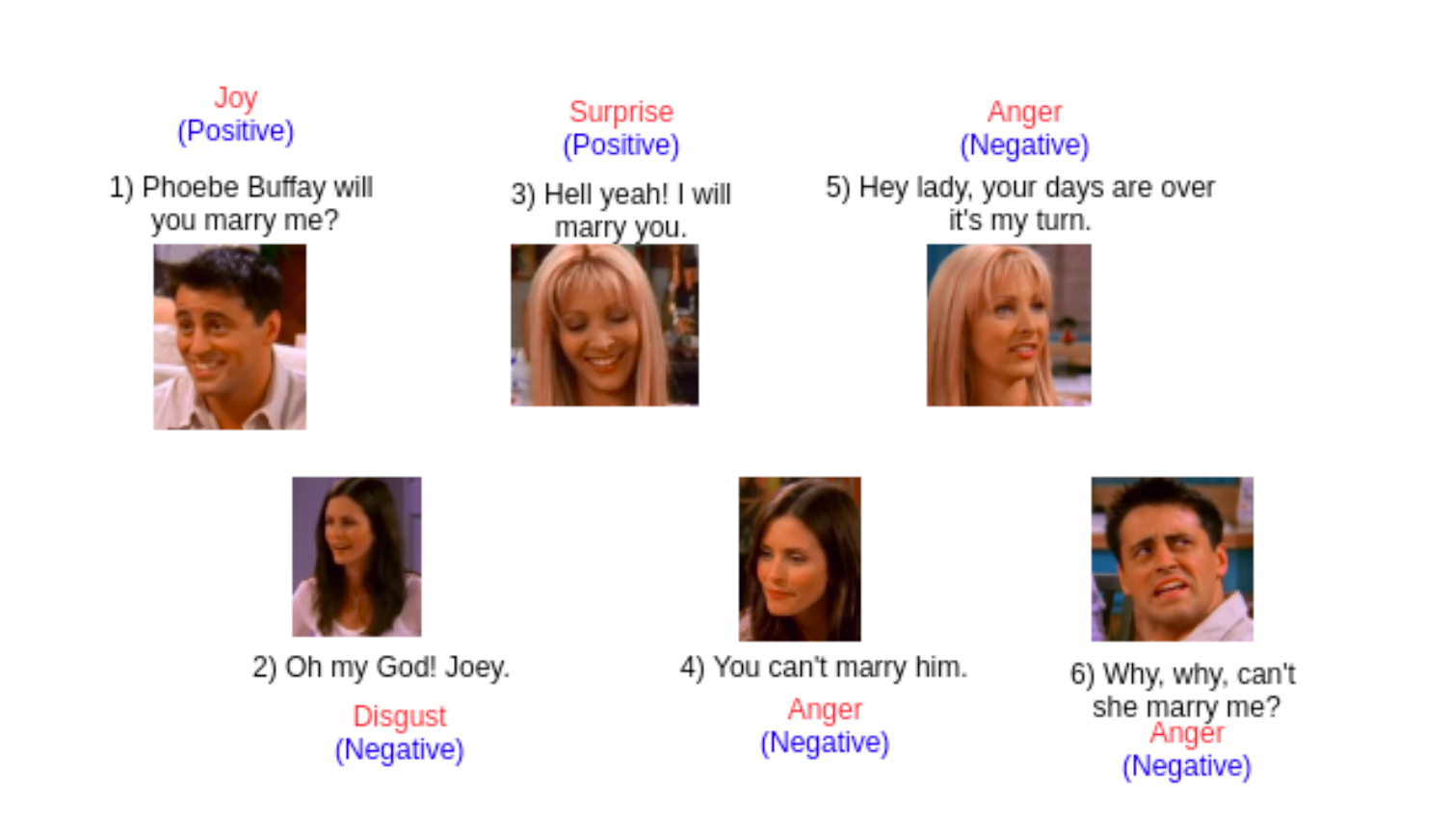

Emotion and sentiment classification in dialogues is a challenging task that has gained popularity in recent times. Humans tend to have multiple emotions with varying intensities while expressing their thoughts and feelings. Emotions in an utterance of dialogue can either be independent or dependent on the previous utterances, thus making the task complex and interesting. Multi-label emotion detection in conversations is a significant task that provides the ability to the system to understand the various emotions of the users interacting. Sentiment analysis in dialogue/conversation, on the other hand, helps in understanding the perspective of the user with respect to the ongoing conversation. Along with text, additional information in the form of audio and video assist in identifying the correct emotions with the appropriate intensity and sentiments in an utterance of a dialogue. Lately, quite a few datasets have been made available for dialogue emotion and sentiment classification, but these datasets are imbalanced in representing different emotions and consist of an only single emotion. Hence, we present at first a large-scale balanced Multimodal Multi-label Emotion, Intensity, and Sentiment Dialogue dataset (MEISD), collected from different TV series that has textual, audio and visual features, and then establish a baseline setup for further research.